The $100 Billion Problem Nobody Talks About

Imagine a self-driving car misreading a stop sign as a speed limit sign. Picture a medical AI misdiagnosing cancer because someone manipulated a single pixel in an X-ray image. Consider a financial fraud detection system that’s been secretly trained to ignore specific attack patterns.

These aren’t science fiction scenarios; they’re documented classes of vulnerability in today’s AI systems, with the potential to cost organizations billions and put lives at risk. Yet many cybersecurity professionals lack the specialized knowledge to defend against these emerging threats.

The reality is stark: As AI systems become the backbone of critical infrastructure, a dangerous skills gap is widening between traditional cybersecurity expertise and the unique challenges of securing artificial intelligence.

A Revolutionary Solution Emerges

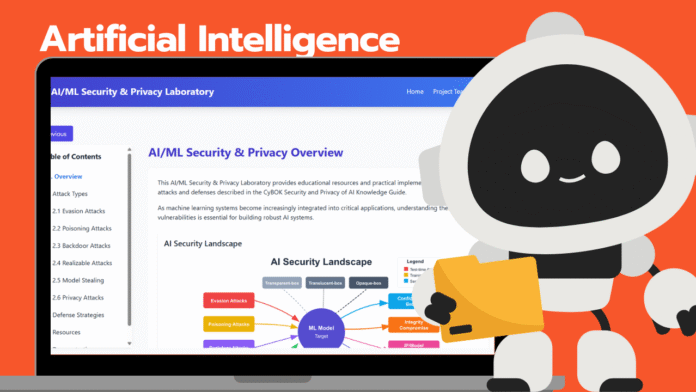

At Anglia Ruskin University, a pioneering educational initiative is addressing this shortage head-on. The CyBOK AI/ML Security Laboratory project, freely accessible at secai.uk, offers a comprehensive, hands-on AI security training platform designed for educational use.

This isn’t just another online course: it’s a complete adversarial machine learning laboratory that gives students direct experience with the same attack techniques that threaten real-world systems today.

The Visionary Leadership Behind the Innovation

Dr. Hossein Abroshan, Course Leader for MSc Cyber Security and Director of the Security, Networks, and Applications Research Group (CAN), recognized that traditional cybersecurity education was failing to address AI-specific vulnerabilities. His solution? Create an immersive, practical learning environment where students can safely explore both offensive and defensive AI security techniques.

“As machine learning systems become increasingly integrated into critical applications, understanding their security vulnerabilities is essential for building robust AI systems.” – CyBOK AI/ML Security Laboratory

The project bridges the critical gap between academic theory and real-world application, ensuring graduates can immediately contribute to securing AI systems in production environments.

What Makes This Laboratory Unprecedented

Comprehensive Attack Simulation Environment

The platform provides hands-on access to realistic attack scenarios through secai.uk, featuring:

Live Vulnerability Demonstrations: Students work with intentionally vulnerable AI models that mirror weaknesses found in production systems. These aren’t academic toy problems; they represent genuine security challenges organizations face today.

Professional-Grade Attack Implementations: The laboratory includes cutting-edge attack techniques with documented success rates:

- Fast Gradient Sign Method (FGSM) attacks achieving an 87% success rate

- Model stealing techniques replicating proprietary systems with 90%+ accuracy

- Backdoor attacks that maintain normal performance while hiding malicious triggers

- Privacy attacks that extract sensitive training data information

Hands-On Learning Architecture

Interactive Jupyter Notebook Collection: Over twelve comprehensive notebooks guide students through each attack type, featuring:

- Step-by-step implementation guides

- Professional code examples

- Visual attack demonstrations

- Quantified impact assessments

Automated Environment Setup: Simple scripts handle all technical dependencies, allowing students to focus on learning rather than configuration challenges.

Video-Enhanced Learning: Professional demonstrations accompany each technique, making complex concepts accessible across different learning styles.

The Complete Threat Landscape: What Students Master

Advanced Adversarial Techniques

Evasion Attacks: The Invisible Threat

Students discover how minimal input manipulations can compromise sophisticated AI systems. Through hands-on implementation of the Fast Gradient Sign Method (FGSM), Projected Gradient Descent (PGD), and Carlini & Wagner (C&W) attacks, they learn how adversaries can achieve high success rates with changes that remain imperceptible to human observers.
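FGSM, the simplest of these attacks, can be sketched without any ML framework on a toy logistic-regression model, where the gradient of the loss with respect to the input has a closed form. The weights, the input, and `epsilon=0.2` are made-up illustration values (not taken from the laboratory’s notebooks), and inputs are assumed to be normalized to [0, 1].

```python
import math

# Hypothetical toy model: logistic regression with fixed weights.
# White-box FGSM assumes the attacker can compute gradients of the loss.
W = [2.0, -1.0, 0.5]
B = -0.5

def predict_proba(x, w=W, b=B):
    """Probability of class 1 for feature vector x."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return -1, 0 or +1."""
    return (v > 0) - (v < 0)

def fgsm(x, y, epsilon, w=W, b=B):
    """One FGSM step: x_adv = clip(x + epsilon * sign(dL/dx), 0, 1).

    For logistic regression with binary cross-entropy loss L,
    the input gradient is dL/dx = (p - y) * w.
    """
    p = predict_proba(x, w, b)
    grad = [(p - y) * wi for wi in w]
    return [min(1.0, max(0.0, xi + epsilon * sign(gi))) for xi, gi in zip(x, grad)]

x = [0.6, 0.3, 0.4]                  # clean input, true label 1
x_adv = fgsm(x, y=1, epsilon=0.2)
print(predict_proba(x) > 0.5, predict_proba(x_adv) > 0.5)  # True False
```

Every feature moves by at most 0.2, yet the predicted class flips. PGD repeats this kind of step several times with a smaller step size, projecting back into the epsilon-ball after each step, while C&W instead solves an optimization problem for the smallest perturbation that changes the output.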

Data Poisoning & Backdoor Attacks: The Silent Sabotage

The curriculum reveals how attackers embed malicious functionality during the training phase. Students implement attacks that produce models which perform normally on clean data but respond to hidden triggers with predetermined malicious outputs. The technique is particularly dangerous because it bypasses traditional testing: a backdoored model can score well on a clean test set, which never contains the trigger.
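The poisoning step of a classic trigger-based backdoor can be sketched in a few lines. This is a minimal illustration under stated assumptions: samples are `(feature_vector, label)` pairs, the "trigger" is a single feature set to 1.0 (standing in for a pixel patch in an image), and `poison_dataset` and `add_trigger` are hypothetical names. Model training is omitted; a model trained on the poisoned set tends to associate the trigger with `target_label` while staying accurate on clean inputs.

```python
import random

def add_trigger(x, trigger_index, trigger_value=1.0):
    """Return a copy of feature vector x with a fixed trigger value stamped in."""
    stamped = list(x)
    stamped[trigger_index] = trigger_value
    return stamped

def poison_dataset(data, target_label, trigger_index, rate, seed=0):
    """Stamp the trigger onto a random fraction `rate` of the (x, y) samples
    and relabel them as `target_label`. Returns (poisoned_data, poisoned_ids)."""
    rng = random.Random(seed)
    n_poison = int(len(data) * rate)
    poisoned_ids = set(rng.sample(range(len(data)), n_poison))
    poisoned = []
    for i, (x, y) in enumerate(data):
        if i in poisoned_ids:
            poisoned.append((add_trigger(x, trigger_index), target_label))
        else:
            poisoned.append((list(x), y))  # clean sample, unchanged
    return poisoned, poisoned_ids

# Hypothetical dataset: 20 samples with 3 features; feature 1 is the trigger slot.
clean = [([0.05 * i, 0.0, 0.5], i % 2) for i in range(20)]
poisoned, ids = poison_dataset(clean, target_label=1, trigger_index=1, rate=0.1)
print(len(ids), "samples poisoned")
```

At inference time the attacker applies `add_trigger` to any input to invoke the hidden behaviour, while the 90% of samples left untouched keep clean-data accuracy high.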

Realizable Attacks: Real-World Vulnerabilities

Unlike academic exercises that perturb abstract feature vectors, these attacks are limited to changes an adversary could actually make in the real world, such as lighting variations, rotation, and physical modifications to objects. Students learn why such attacks pose a particularly serious threat to deployed AI systems.
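The core loop of a realizable attack can be sketched as a search over physically plausible transformations rather than arbitrary pixel changes. Everything here is a hypothetical stand-in: `toy_classifier` replaces a real image model, the image is a tiny 2x2 grid of intensities in [0, 1], and the candidate set (brightness scaling plus 90-degree rotations) is far coarser than the continuous lighting and angle changes a real attack would explore.

```python
def brightness(img, factor):
    """Scale every pixel (values in [0, 1]) and clip, mimicking a lighting change."""
    return [[min(1.0, max(0.0, p * factor)) for p in row] for row in img]

def rotate90(img):
    """Rotate a 2D image (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def toy_classifier(img):
    """Hypothetical stand-in model: class 1 if the top half is brighter."""
    h = len(img) // 2
    top = sum(sum(row) for row in img[:h])
    bottom = sum(sum(row) for row in img[h:])
    return 1 if top > bottom else 0

def realizable_search(img, model):
    """Try physically plausible transformations in order and return the first
    (name, transformed_image) that changes the model's prediction, else None."""
    original = model(img)
    candidates = [(f"brightness x{f}", lambda im, f=f: brightness(im, f))
                  for f in (0.6, 0.8, 1.2, 1.4)]
    candidates += [("rotate 90", rotate90),
                   ("rotate 180", lambda im: rotate90(rotate90(im)))]
    for name, transform in candidates:
        candidate = transform(img)
        if model(candidate) != original:
            return name, candidate
    return None

image = [[0.9, 0.8],
         [0.2, 0.1]]
print(realizable_search(image, toy_classifier))
```

More sophisticated physical attacks optimize a perturbation that survives a whole distribution of such transformations instead of brute-forcing a short list, but the constraint is the same: only changes an attacker could produce in the world are allowed.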

Model Stealing: Intellectual Property Theft

Through systematic API querying, students discover how proprietary AI models can be replicated with nothing more than query access. The laboratory demonstrates methods achieving over 90% accuracy in model replication, a significant threat to intellectual property and competitive advantage.
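The simplest form of API-based extraction is equation solving: if a service returns confidence scores from a logistic-regression model, each response reveals one linear equation in the secret parameters, so d + 1 well-chosen queries recover a d-feature model. The sketch below assumes exactly that setting; `HypotheticalVictimAPI` and its weights are invented for illustration. Against deep networks or label-only APIs, attackers instead collect many query/response pairs and train a surrogate model, which is where fidelity figures such as the 90% above come into play.

```python
import math

class HypotheticalVictimAPI:
    """Stand-in for a prediction API that returns class-1 confidence scores.
    The weights are secret from the attacker's point of view."""

    def __init__(self, weights, bias):
        self._w = weights
        self._b = bias
        self.queries = 0

    def predict_proba(self, x):
        self.queries += 1
        z = sum(wi * xi for wi, xi in zip(self._w, x)) + self._b
        return 1.0 / (1.0 + math.exp(-z))

def logit(p):
    """Inverse of the sigmoid: recovers the model's raw score from a probability."""
    return math.log(p / (1.0 - p))

def steal_logistic_model(api, n_features):
    """Query the zero vector to get the bias, then each unit vector e_i to get
    w_i + bias. Uses n_features + 1 queries in total."""
    b = logit(api.predict_proba([0.0] * n_features))
    w = []
    for i in range(n_features):
        e_i = [0.0] * n_features
        e_i[i] = 1.0
        w.append(logit(api.predict_proba(e_i)) - b)
    return w, b

api = HypotheticalVictimAPI(weights=[1.5, -2.0, 0.7], bias=0.3)
stolen_w, stolen_b = steal_logistic_model(api, n_features=3)
print(api.queries, [round(v, 6) for v in stolen_w], round(stolen_b, 6))
```

Returning only the top label, rounding confidences, or rate-limiting queries all make this particular attack harder, which is why such measures are common extraction defenses.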

Privacy Attacks: Data Extraction Techniques

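Students implement membership inference (determining whether a given record was in the training set), model inversion (reconstructing representative training inputs from model outputs), and attribute inference (recovering sensitive attributes of individuals in the data). These attacks show how seemingly secure deployments can leak confidential information about their training data.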
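A common membership-inference baseline exploits overfitting: models are often more confident on records they were trained on. The sketch below thresholds the model’s confidence in the true label. The confidence scores are invented for illustration, and in practice the threshold would be tuned, for example on shadow models trained to mimic the target. On a balanced set of members and non-members, 0.5 accuracy is no better than guessing.

```python
def predict_membership(confidences, threshold):
    """Guess 'member' (True) when the model's confidence in the true label
    is at least `threshold`."""
    return [c >= threshold for c in confidences]

def attack_accuracy(member_conf, nonmember_conf, threshold):
    """Fraction of records whose membership the attack guesses correctly."""
    guesses_in = predict_membership(member_conf, threshold)
    guesses_out = predict_membership(nonmember_conf, threshold)
    correct = sum(guesses_in) + sum(not g for g in guesses_out)
    return correct / (len(member_conf) + len(nonmember_conf))

# Hypothetical confidence scores the target model assigned to the true label.
members = [0.99, 0.97, 0.95, 0.90, 0.70]      # records in the training set
non_members = [0.85, 0.60, 0.55, 0.92, 0.40]  # records never seen in training
print(attack_accuracy(members, non_members, threshold=0.9))  # 0.8
```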

Robust Defense Implementation

Adversarial Training Methodologies: Students learn to include adversarial examples in the training data so that models become more robust against evasion attempts.
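A minimal sketch of FGSM-based adversarial training on a toy logistic-regression model follows, trained by full-batch gradient descent in pure Python. The equal weighting of clean and adversarial examples, `eps=0.3`, the learning rate, and the two-point dataset are illustration choices, not the laboratory’s settings; practical setups train neural networks in a framework and often generate the adversarial examples with multi-step PGD instead of FGSM.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return -1, 0 or +1."""
    return (v > 0) - (v < 0)

def fgsm_example(w, b, x, y, eps):
    """FGSM for logistic regression: the input gradient of the loss is (p - y) * w."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def adversarial_train(data, eps=0.3, lr=0.5, epochs=200):
    """Full-batch gradient descent on an equal mix of clean and FGSM examples.

    Adversarial examples are regenerated against the current weights every
    epoch, so the model is always trained against attacks on its latest state.
    """
    d = len(data[0][0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        batch = []
        for x, y in data:
            batch.append((x, y))                              # clean example
            batch.append((fgsm_example(w, b, x, y, eps), y))  # adversarial copy
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in batch:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        n = len(batch)
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

data = [([1.0], 1), ([-1.0], 0)]   # hypothetical 1-D training set
w, b = adversarial_train(data)
print(w, b)
```

The resulting model classifies both the clean points and their eps-perturbed versions (±0.7) correctly.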

Data Sanitization Techniques: Students implement strategies for detecting and removing malicious training samples, mitigating poisoning and backdoor attacks.
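One simple sanitization heuristic removes training points that sit unusually far from the centroid of their labelled class, which catches label-flipped or out-of-distribution poison. The rule below (drop anything farther than `k` times the class median distance) and the function names are assumptions for illustration. A strong outlier also pulls the centroid toward itself, and backdoor samples that look like clean data in input space usually call for representation-level defenses instead, such as inspecting a network’s internal activations.

```python
import math
import statistics

def centroid(points):
    """Coordinate-wise mean of a list of equal-length vectors."""
    return [sum(coords) / len(points) for coords in zip(*points)]

def sanitize(data, k=3.0):
    """Drop (x, y) samples whose distance to their class centroid exceeds
    k times the median distance within that class. Returns (kept, removed)."""
    by_class = {}
    for x, y in data:
        by_class.setdefault(y, []).append(x)
    kept, removed = [], []
    for label, points in by_class.items():
        center = centroid(points)
        dists = [math.dist(p, center) for p in points]
        cutoff = k * statistics.median(dists)
        for p, dist in zip(points, dists):
            if dist <= cutoff:
                kept.append((p, label))
            else:
                removed.append((p, label))
    return kept, removed

# Hypothetical 2-D data: class 0 near (0, 0), class 1 near (5, 5),
# plus one poisoned point that sits in class 1's region but is labelled 0.
data = [([0.0, 0.0], 0), ([0.1, 0.0], 0), ([0.0, 0.1], 0), ([0.1, 0.1], 0),
        ([5.0, 5.0], 0),
        ([5.0, 5.0], 1), ([5.1, 5.0], 1), ([5.0, 5.1], 1)]
kept, removed = sanitize(data)
print(removed)
```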

Differential Privacy Implementation: A mathematical framework that provides quantifiable privacy guarantees by bounding how much any single training record can influence a model’s outputs.
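In model training, differential privacy is usually applied through DP-SGD, which clips per-example gradients and adds Gaussian noise. The core idea is easiest to see with the Laplace mechanism on a single counting query, sketched below. This is an illustration only, not necessarily how the laboratory’s notebooks implement differential privacy; the dataset, predicate, and seed are hypothetical. A smaller `epsilon` means more noise and a stronger privacy guarantee.

```python
import random
import statistics

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    A counting query has sensitivity 1: adding or removing one record changes
    the true answer by at most 1, so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

def is_even(r):
    return r % 2 == 0

rng = random.Random(42)
records = list(range(100))  # hypothetical dataset; the true count is 50
releases = [private_count(records, is_even, epsilon=1.0, rng=rng) for _ in range(2000)]
print(round(statistics.mean(releases), 2), round(statistics.variance(releases), 2))
```

Each individual release is noisy, but the noise is unbiased: over many releases the mean approaches 50 and the variance approaches 2 / epsilon² = 2. A real deployment would account for the privacy budget spent by every release rather than publishing thousands of them.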

Ensemble Defense Strategies: Multi-model approaches that enhance security through diversification and consensus mechanisms.
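A consensus mechanism of this kind can be sketched as a majority vote that also flags low-agreement inputs for review, on the reasoning that an adversarial example may fool only some members of a diverse ensemble. The three threshold "models" and the 0.75 agreement cutoff are hypothetical. Diversity matters: adversarial examples often transfer between similar models, so an ensemble of near-identical members adds little protection.

```python
from collections import Counter

def ensemble_predict(models, x, min_agreement=0.75):
    """Majority vote over independently trained models.

    Returns (label, agreement, flagged): agreement is the fraction of models
    voting for the winning label, and flagged is True when it falls below
    min_agreement.
    """
    votes = Counter(model(x) for model in models)
    label, count = votes.most_common(1)[0]
    agreement = count / len(models)
    return label, agreement, agreement < min_agreement

# Hypothetical ensemble: three threshold classifiers with different boundaries.
models = [lambda x: int(x > 0.0), lambda x: int(x > 0.5), lambda x: int(x > 1.0)]
for x in (-1.0, 0.7, 2.0):
    print(x, ensemble_predict(models, x))
```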

Industry Impact: Where Graduates Make a Difference

Financial Services Transformation

Graduates secure fraud detection systems against adversarial attacks that could enable sophisticated financial crimes to bypass security controls, protecting billions in assets.

Healthcare System Protection

Students learn to defend medical AI systems against attacks that could manipulate diagnostic outputs, ensuring patient safety and maintaining trust in AI-assisted healthcare.

Autonomous System Security

The curriculum addresses critical vulnerabilities in self-driving vehicles, drones, and industrial automation where security failures have immediate physical safety implications.

Enterprise AI Governance

Graduates develop expertise in assessing and securing the expanding array of AI tools across organizations, from customer service systems to supply chain optimization platforms.

Technical Excellence and Academic Rigor

Scalable Educational Architecture

The platform accommodates diverse learning environments, from individual study on basic laptops to classroom deployments on high-performance computing systems with GPU acceleration.

Industry-Standard Methodology

Students gain proficiency with professional tools and frameworks including TensorFlow, PyTorch, and specialized adversarial ML libraries used by leading security teams globally.

Realistic Assessment Scenarios

The environment provides both black-box API testing and white-box analysis capabilities, preparing students for the full spectrum of professional security assessment challenges.

Rigorous Quality Assurance

The platform is evaluated continuously through systematic student feedback on usability, comprehensibility, and learning impact, with satisfaction scores reported above 3.0 out of 5.0.

Global Accessibility and Open Innovation

Universal Access Initiative

The complete laboratory environment operates as a public resource at secai.uk, eliminating financial barriers to advanced AI security education globally.

Comprehensive Resource Library

- Automated installation and configuration scripts

- Complete Jupyter notebook collection for offline use

- Full source code repository access

- Extensive educator documentation and support materials

Open Government Licensing

Because the resources are released under Crown Copyright and the Open Government Licence, they remain freely available to the global cybersecurity community.

Measurable Impact and Future Security

The CyBOK AI/ML Security Laboratory project is more than an educational innovation; it’s a strategic investment in global AI security infrastructure. By providing comprehensive, hands-on training in both offensive and defensive AI security techniques, the platform helps prepare the next generation of cybersecurity professionals to protect the AI systems increasingly central to modern society.

As artificial intelligence becomes more pervasive across critical infrastructure, healthcare, finance, and transportation, the security professionals trained through this platform will serve as the first line of defense against sophisticated AI-targeted attacks.

Begin Your AI Security Journey Today

The future of cybersecurity lies in understanding artificial intelligence vulnerabilities and defenses. Whether you’re a student, educator, or security professional, the comprehensive resources available at secai.uk provide everything needed to develop expertise in this critical field.

Access the complete laboratory environment today and join the global community building more secure AI systems for tomorrow.

About the Project

The CyBOK AI/ML Security Laboratory project is funded by the Cyber Security Body of Knowledge (CyBOK) initiative as part of collaborative efforts to advance cybersecurity education globally. Licensed under the Open Government Licence, Crown Copyright, The National Cyber Security Centre 2025.